How Claude Shannon invented the future

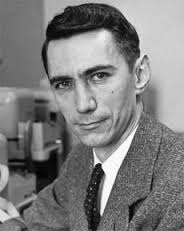

Science seeks the basic laws of nature. Mathematics seeks new theorems based on old ones. Engineering builds systems to solve human needs. The three disciplines are interdependent but distinct. It is very rare for one person to simultaneously make fundamental contributions to all three, but Claude Shannon was a rare person.

Despite being the subject of the recent documentary The Bit Player – and his research work and philosophy having inspired my own career – Shannon is not exactly a household name. He never won a Nobel Prize, and he was not a celebrity like Albert Einstein or Richard Feynman, either before or after his death in 2001. But more than 70 years ago, in a single groundbreaking paper, he laid the foundation for the entire communication infrastructure underlying the modern information age.

Shannon was born in Gaylord, Michigan, in 1916, the son of a local businessman and a teacher. After receiving bachelor’s degrees in electrical engineering and mathematics from the University of Michigan, he wrote a master’s thesis at the Massachusetts Institute of Technology that applied a mathematical discipline called Boolean algebra to the analysis and synthesis of switching circuits. It was a transformative work, turning circuit design from an art to a science, and is now considered to be the starting point of digital circuit design.

Next, Shannon set his sights on an even bigger goal: communication.

Claude Shannon wrote a master’s thesis that launched digital circuit design, and a decade later he wrote his seminal paper on information theory, “A Mathematical Theory of Communication.”

Communication is one of the most basic human needs. From smoke signals to carrier pigeons to telephones and televisions, humans have always sought ways to communicate further, faster and more reliably. But the engineering of communication systems has always been linked to the source and the specific physical medium. Shannon instead wondered, “Is there a grand unified theory for communication?” In a 1939 letter to his mentor, Vannevar Bush, Shannon outlined some of his early ideas on “the fundamental properties of general systems for the transmission of intelligence.” After working on the problem for a decade, Shannon finally published his masterpiece in 1948: “A Mathematical Theory of Communication.”

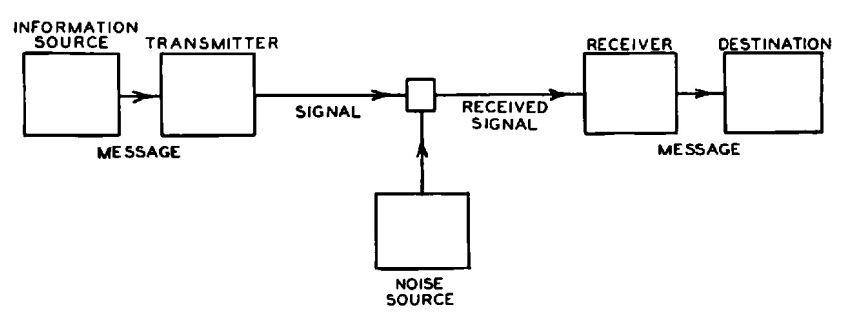

The core of his theory is a simple but very general model of communication: A transmitter encodes information into a signal, which is corrupted by noise and decoded by the receiver. Despite its simplicity, Shannon’s model incorporates two key ideas: isolate the sources of information and noise from the communication system to be designed, and model both sources probabilistically. He imagined that the information source generated one of many possible messages to communicate, each of which had a certain probability. Probabilistic noise added more randomness for the receiver to unravel.

Before Shannon, the problem of communication was seen primarily as a problem of deterministic signal reconstruction: how to transform a received signal, distorted by the physical medium, to reconstruct the original as accurately as possible. Shannon’s genius lies in his observation that the key to communication is uncertainty. After all, if you knew in advance what I was going to tell you in this column, what would be the point of writing it?

Schematic diagram of Shannon’s communication model, excerpted from his paper.

This single observation moved the communication problem from the physical to the abstract, allowing Shannon to model uncertainty using probability. This was a complete shock to the communication engineers of the time.

In this framework of uncertainty and probability, Shannon set out to systematically determine the fundamental limit of communication. His answer is divided into three parts. The concept of the “bit” of information, used by Shannon as the basic unit of uncertainty, plays a fundamental role in all three. A bit, which is short for “binary digit,” can be either a 1 or a 0, and Shannon’s paper is the first to use the word (although he said mathematician John Tukey used it first in a memo).

First, Shannon devised a formula for the minimum number of bits per second needed to represent the information, a number he called the entropy rate, H. This number quantifies the uncertainty involved in determining which message the source will generate. The lower the entropy rate, the lower the uncertainty, and therefore the easier it is to compress the message into something shorter. For example, texting at a rate of 100 English letters per minute means sending one of 26¹⁰⁰ possible messages each minute, each represented by a sequence of 100 letters. All of these possibilities could be encoded in 470 bits, since 2⁴⁷⁰ ≈ 26¹⁰⁰. If the sequences were equally likely, Shannon’s formula would say that the entropy rate is indeed 470 bits per minute. In reality, some sequences are much more likely than others, and the entropy rate is much lower, allowing for more compression.
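The arithmetic in the texting example can be checked with a few lines of Python. This is a minimal sketch, not Shannon's own notation: the function name `entropy_bits` and the four-symbol "skewed" source are hypothetical, chosen only to show that unequal probabilities push the entropy below the equally likely case.

```python
import math

def entropy_bits(probs):
    """Shannon entropy, in bits, of a discrete probability distribution."""
    return -sum(p * math.log2(p) for p in probs if p > 0)

# 26 equally likely letters: each letter carries log2(26) bits of uncertainty.
uniform_letters = [1 / 26] * 26
bits_per_letter = entropy_bits(uniform_letters)
bits_per_minute = 100 * bits_per_letter  # 100 letters per minute

print(round(bits_per_letter, 2))  # 4.7
print(round(bits_per_minute))     # 470

# Hypothetical skewed source (an assumption for illustration only):
# four symbols, one of which is far more likely than the others.
skewed = [0.5, 0.25, 0.125, 0.125]
skewed_bits = entropy_bits(skewed)        # 1.75 bits per symbol
uniform4_bits = entropy_bits([0.25] * 4)  # 2.0 bits per symbol

print(skewed_bits < uniform4_bits)  # True: less uncertainty, more compressible
```

The gap between 1.75 and 2 bits in the toy source is the same effect, in miniature, that lets real English text be compressed well below 470 bits per 100 letters.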

Second, he provided a formula for the maximum number of bits per second that can be reliably communicated in the face of noise, which he called the system’s capacity, C. This is the maximum rate at which the receiver can resolve the uncertainty in the message, which makes it the speed limit for communication.

Finally, he showed that reliable communication of the information from the source in the face of noise is possible if and only if H < C. Thus, information is like water: If the flow rate is less than the capacity of the pipe, the stream gets through reliably.
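To make the condition H < C concrete, here is a rough sketch for one specific channel. The column does not name a channel model, so the choice of a binary symmetric channel (each transmitted bit is flipped independently with some probability) is an assumption, as are the function names and the numbers used. For that channel the standard textbook capacity is 1 minus the binary entropy of the flip probability, measured in bits per channel use.

```python
import math

def binary_entropy(p):
    """Entropy in bits of a coin that lands heads with probability p."""
    if p in (0.0, 1.0):
        return 0.0
    return -p * math.log2(p) - (1 - p) * math.log2(1 - p)

def bsc_capacity(flip_prob):
    """Capacity, in bits per channel use, of a binary symmetric channel."""
    return 1.0 - binary_entropy(flip_prob)

def reliable_communication_possible(entropy_rate, capacity):
    """Shannon's condition: the source rate must be below the capacity."""
    return entropy_rate < capacity

# Hypothetical numbers: a channel used 1,000 times per second that flips
# 11% of the bits, and a source producing 470 bits per minute.
uses_per_second = 1000
capacity_bps = uses_per_second * bsc_capacity(0.11)  # roughly 500 bits/second
source_bps = 470 / 60                                # under 8 bits/second

print(reliable_communication_possible(source_bps, capacity_bps))  # True
print(bsc_capacity(0.5))  # 0.0: pure coin-flip noise carries no information
```

Both rates must be expressed in the same unit before they are compared, which is why the per-use capacity is multiplied by the number of channel uses per second.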

Although it is a theory of communication, it is at the same time a theory of how information is produced and transferred: an information theory. That is why Shannon is considered today “the father of information theory.”

His theorems led to some counterintuitive conclusions. Suppose you are talking in a very noisy place. What’s the best way to make sure your message gets through? Maybe repeat it many times? That’s certainly anyone’s first instinct in a noisy restaurant, but it turns out it’s not very efficient. Sure, the more times you repeat yourself, the more reliable the communication will be. But you’ve sacrificed speed for reliability. Shannon showed us that we can do much better. Repeating a message is an example of using a code to convey a message, and by using different and more sophisticated codes, you can communicate quickly – up to the speed limit, C – while maintaining any degree of reliability.
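The cost of repetition can be seen in a small calculation. The sketch below assumes, purely for illustration, a channel that flips each bit independently with probability 0.1 and a receiver that takes a majority vote over n copies of each bit; the function names are hypothetical. It computes the exact probability that the vote comes out wrong, alongside the rate of the repetition code (one useful bit per n bits sent).

```python
import math

def repetition_error_prob(n, flip_prob):
    """Probability that a majority vote over n copies (n odd) is wrong."""
    return sum(
        math.comb(n, k) * flip_prob**k * (1 - flip_prob) ** (n - k)
        for k in range(n // 2 + 1, n + 1)  # more than half the copies flipped
    )

def repetition_rate(n):
    """Useful bits delivered per bit transmitted."""
    return 1 / n

flip_prob = 0.1  # assumed noise level
for n in (1, 3, 5, 7, 9):
    err = repetition_error_prob(n, flip_prob)
    print(f"n={n}  rate={repetition_rate(n):.3f}  error={err:.6f}")

# For comparison, the capacity of this assumed channel (1 minus the binary
# entropy of 0.1) is about 0.53 bits per transmitted bit and does not shrink.
capacity = 1 + flip_prob * math.log2(flip_prob) \
             + (1 - flip_prob) * math.log2(1 - flip_prob)
print(round(capacity, 2))  # 0.53
```

With repetition, pushing the error toward zero forces the rate toward zero as well. Shannon's result says that better codes can keep the rate near the capacity, here roughly half a bit per transmitted bit, while still making errors as rare as desired.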

Another unexpected conclusion that emerges from Shannon’s theory is that, whatever the nature of the information (a Shakespeare sonnet, a recording of Beethoven’s Fifth Symphony, or a Kurosawa film), it is always most efficient to encode it into bits before transmitting it. Thus, in a radio system, for example, although both the initial sound and the electromagnetic signal sent through the air are analog waveforms, Shannon’s theorems imply that it is optimal to first digitize the sound wave into bits, and then map those bits onto the electromagnetic wave. This surprising result is the cornerstone of the modern digital information age, in which the bit reigns as the universal currency of information.

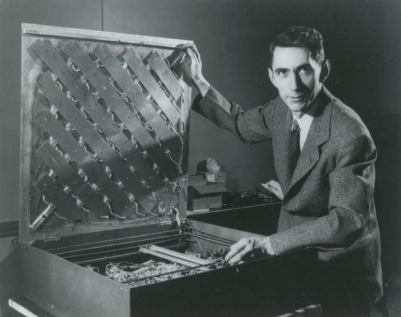

Shannon also had a playful side, which he often brought to his work. Here, he poses with a maze he built for an electronic mouse, named Theseus.

Shannon’s general theory of communication is so natural that it’s as if he discovered the communication laws of the universe, instead of inventing them. His theory is as fundamental as the physical laws of nature. In that sense, he was a scientist.

Shannon invented new mathematics to describe the laws of communication. He introduced new ideas, such as the entropy rate of a probabilistic model, which have been applied in far-reaching mathematical branches, such as ergodic theory, the study of the long-term behavior of dynamical systems. In that sense, Shannon was a mathematician.

But above all, Shannon was an engineer. His theory was motivated by practical engineering problems. And while it was esoteric to engineers of his day, Shannon’s theory has become the standard framework on which all modern communication systems are based: optical, submarine, and even interplanetary. Personally, I have been fortunate to be part of a worldwide effort to apply and extend Shannon’s theory to wireless communication, increasing communication speeds by two orders of magnitude over multiple generations of standards. In fact, the 5G standard currently being rolled out uses not one but two practically proven codes to approach the Shannon limit.

Although Shannon died in 2001, his legacy lives on in the technology that makes up our modern world and in the devices he created, like this remote-controlled bus.

Shannon discovered the basis for all this more than 70 years ago. How did he do it? By relentlessly focusing on the essential feature of a problem and ignoring all other aspects. The simplicity of his communication model is a good illustration of this style. He also knew how to focus on what is possible, rather than what is immediately practical.

Shannon’s work illustrates the true role of high-level science. When I started college, my advisor told me that the best work prunes the tree of knowledge instead of growing it. At the time, I didn’t know what to make of this message; I always thought my job as a researcher was to add my own twigs. But throughout my career, when I had the opportunity to apply this philosophy in my own work, I began to understand it.

When Shannon began studying communication, engineers already had a large collection of techniques. It was his work of unification that pruned all these twigs of knowledge into a single charming and coherent tree, which has borne fruit for generations of scientists, mathematicians, and engineers.